Being able to group similar documents together is useful .. especially if it can be done automatically.

Why is this useful? Some reasons include ..

- Finding useful documents based on one you know is useful.

- Finding documents that are similar to one that you found bathing a keyword .. where the similar don't have that keyword, but are still similar.

- Automatically organising a medium-to-large collection of documents to help quickly understand what the corpus is about, and maybe prioritise which documents you look at further.

- ....

Ok that sounds good .... how do we go about doing that?

Word Similarity

Well, we've already done the pretty amazing work for finding

words (not documents) that are related or similar.

We did that based on co-occurrence .. which meant that words could be related, even if the two words themselves didn't co-occur in the same document themselves. They'd be related because they shared other words which co-occurred. Have a look at the following to remind ourselves how

car and

van are related, even though they don't co-occur directly.

Do we want to do this for documents? Could we?

We can't directly. Documents don't have the same idea of co-occurrence with other documents. Well, not in a useful way.

So we have to roll back to first principles again.

Document Similarity?

How do we want to construct the concept of document similarity? Yes, we have the power to invent it ourselves!

Let's start with the most obvious idea ... similar documents will have common words.

That is simple - and makes intuitive sense. Documents about cars will all talk about wheels, seats, paint, etc. The more words they have in common, the more similar they are.

We could try to come up with even more sophisticated schemes like ...

- documents are similar if the words they contain have a high co-occurrence score with words in the similar document .. so documents about cars are considered similar to documents about vans.

- documents are similar if the distribution of word counts is similar ... so the distribution is kind of like a fingerprint

- ...

But as usual, let's start simple first .. and only get more complicated if we really need to.

Simple Document Similarity

That simple idea of documents being similar if they contain common words should be easy to implement in code.

Remember, we've already got a matrix of word frequency per document. If we simplify those word frequencies to a binary yes/no .. a 0 or 1 .. we can then match up words that occur in more than one document.

The following shows this simplification of word count to yes/no or 1/0. You can see that any word count value above 0 gets turned into a 1, and 0 stays 0.

If you look at the above diagram for a few moments, you can start to see what the documents might be about:- doc1 and doc2 seem to be about cars as they both contain wheel and seat.

- doc3 does't seem to be about cars.

- doc3 seems to be about cake.

- doc1 and doc2 are definitely not about cakes.

- but doc3 mentions lights .. maybe candles on a cake, rather than car headlights?

You can start to see how

doc1 and

doc2 are similar.

More Similar?

But is

doc3 similar to

doc2 because they both mention

lights? Surely not to the same extent as

doc1 and

doc1 are similar because they're about cars. How do we encode this notion that

doc1 and

doc2 are more similar than

doc2 and

doc3?

We could add up the number of "hits" or "matches" ... as shown below:

That certainly shows that doc1 and

doc2 are more similar than

doc2 and

doc3. Job done!Tiny Bit More Sophisticated?

Do we need to make this more complicated by using the actual word counts, rather than the simplified 1's and 0's?

Let's try it and see what happens ... if we use those word count numbers and multiply them ... kinda like we did with word similarity and co-occurrence scores.

That actually looks like an improvement. The larger numbers boost the similarity scores. We now have

doc1 and

doc2 as very similar ... and

doc2 and

doc3 and very slightly similar.

That's what we want - so let's keep this approach!

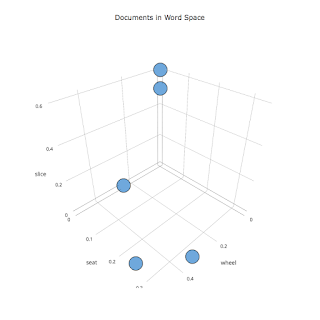

Those Vectors .. Again!

Those multiplications look very similar to the calculations we were doing for

word-word similarity ... when we considered words as vectors in a space of co-occurrences.

This time we have

documents (not words) in a space where the dimensions are the

word counts (not co-occurences).

Look again at the above diagram .. imagine we have a new document ... it will sit in the vector space and be positioned according to the words it contains .. and how often those words occur. So that similarity calculation where we multiply the word counts ... we've already seen that before, it was just like taking the dot product of the two things being compared. So here we have:

$$\mathbf{similarity} = \mathbf{doc1 \cdot doc2}$$

Remember that this dot product is a measure of the angle between two vectors $\mathbf{a \cdot b} = |a| \ |b| \ \mathbf{cos}(\theta)$. If the vectors are normalised to length 1, this simplifies massively to $\mathbf{a \cdot b} = \mathbf{cos}(\theta)$.

A smaller angle means the vectors are more aligned, and $\mathbf{cos}(\theta) \to 1$ ... a bigger angle means they are point in very different directions and are not similar, and $\mathbf{cos}(\theta) \to 0 \to -1$. Let's update our diagram to show this:

Just like with word-vectors ... you can see that similar documents will point in similar directions .. the direction being influenced directly by the word content of those documents.

Do we really want to normalise those vectors? What do we lose if we do?

If a document has a very large word count for

cake, for example, then the vector will be stretched along that axis quite a long way, which will effect the angle it makes with a document with a smaller

cake word count. So we don't lose the angle information. And anyway the angle doesn't change massively as word count changes. So we don't lose much and we gain much simpler calculations.

Cool - so let's crack on and see what the results are!

Mixed Corpus

To find similar documents in a corpus, and to group them (also called

clustering) .. we should have a corpus that does actually contain groups of different kinds of documents. At least for testing these ideas .. .in the real world we won't know what a corpus contains.

A mixed corpus can be made by taking a small set of documents from existing corpuses, each of which are centred around a theme .. e.g. recipes, clinton emails, shakespeare .. That is a quick and easy approach ...

The new mixed corpus lives at:

A Little Refinement - Word Frequency not WordCount

Previously we've realised that word count on it's own can bias towards larger documents, and unfairly penalise shorter documents which will never have high word counts.

To mitigate this we used word frequency - that is word count divided by the number of words in a document. We'll do that here too.

Results

Listing the document pairs in order of descending similarity we hope to see documents that come from the same original corpora as being similar to each other.

That is - we hope to see documents about recipes being most similar to other documents about recipes ... and sections of Macbeth similar to other sections of Macbeth ...

Let's see what we actually get. The following is a snipper of the full 1326 rows of the similarity pairing:

doc1 doc2 similarity0 11.txt 10.txt 1.0000001 10.txt 06.txt 0.8437392 10.txt 08.txt 0.8272473 10.txt 07.txt 0.8105824 12.txt 10.txt 0.8093475 12.txt 11.txt 0.7912456 11.txt 06.txt 0.7818097 the-report-of-the-iraq-inquiry_section_annex-5... 10.txt 0.7781678 10.txt 05.txt 0.7683229 11.txt 08.txt 0.75400410 10.txt 03.txt 0.75136311 12.txt 03.txt 0.74440612 12.txt 06.txt 0.74415613 12.txt 08.txt 0.74258014 06.txt 05.txt 0.73075915 macbeth_act_01_scene_01.txt 10.txt 0.71730916 10.txt 02.txt 0.70920017 11.txt 07.txt 0.70176218 C05739567.txt C05739545.txt 0.70093219 C05739567.txt C05739559.txt 0.68812520 the-report-of-the-iraq-inquiry_section_annex-5... 11.txt 0.68657121 11.txt 03.txt 0.68436622 13.txt 12.txt 0.67244123 08.txt 06.txt 0.67107224 06.txt 03.txt 0.67043425 11.txt 05.txt 0.66594326 13.txt 10.txt 0.66506827 10.txt 01.txt 0.66291728 the-report-of-the-iraq-inquiry_section_annex-1... 10.txt 0.66012429 11.txt 09.txt 0.652069... ... ... ...70 08.txt 01.txt 0.56132071 the-report-of-the-iraq-inquiry_section_annex-1... 06.txt 0.56024072 the-report-of-the-iraq-inquiry_section_annex-5... the-report-of-the-iraq-inquiry_section-11.txt 0.55982173 09.txt 08.txt 0.55899774 C05739559.txt C05739547.txt 0.55628175 the-report-of-the-iraq-inquiry_section-12.txt 11.txt 0.55627976 13.txt 08.txt 0.55559977 09.txt 03.txt 0.55479378 03.txt 02.txt 0.54981679 the-report-of-the-iraq-inquiry_section_annex-5... the-report-of-the-iraq-inquiry_section-20.txt 0.54891480 the-report-of-the-iraq-inquiry_section-11.txt 06.txt 0.54866181 12.txt 02.txt 0.54781682 the-report-of-the-iraq-inquiry_section_annex-5... macbeth_act_01_scene_01.txt 0.54539583 the-report-of-the-iraq-inquiry_section-32.txt 10.txt 0.54482684 07.txt 05.txt 0.54445685 11.txt 04.txt 0.544003......Looking at the above, and the rest of the list, we can see:

- The documents most similar to themselves are the recipes. That's because they share loads of words amongst each other.

- You may notice that there are no documents most similar to themselves .. that's because the code uses itertools.combinations() which excludes same-same combinations .. which works for us! (we're not interested in the obvious fact that a document is perfectly similar to itself)

- The results don't perfectly separate into neat groups, the documents we already know are from distinct corpora. For example the 8th row shows a document from the Iraq inquiry being similar to a recipe.

Let's try visualising the results .. which might help us see the big picture, rather than focus on the individual odd matches.

Visualising Document Similarity

The graph of connected nodes that we used before seems like a good starting point for visualising document similarity.

We do need to change it so that the most similar documents are clustered together in the graph. That means the links need to attract the document nodes. We'll do more refinement in the next post part 2/2 .. but for now we can apply the following change to the visualisation javascript d3.js code:

var simulation = d3.forceSimulation(graph.nodes)

.force("link", d3.forceLink(graph.links).distance(function(d){return 10 / d.%%edge_attribute%%;}))

.force("charge", d3.forceManyBody().strength(-20))

.force("radius", d3.forceCollide(15))

.force("center", d3.forceCenter(width / 2.0, height / 2.0));

Previously all the links in the graph had a constant "normal length" (before being subject to forces) .. we now use the similarity score to make strongly similar documents tend to have shorter links.

Let's see what happens if we visualise the top 7 similarities (excluding the Iraq document):

This is nice! It may not look like much but we have automatically .. without any human reading .. identified a bunch of documents from a mixed set .. which are similar to each other. We can confirm this worked because we actually prepared the mixed corpus .. and this first group .. or cluster... are all Italian recipes documents!

Success!Let's add more documents to that visualisation .. let's up the number to .. say .. 50 similarities ..

There are two distinct clusters here. We couldn't easily see that by looking at that list of doc1-doc2 similarities.

That's the power of visualisation!But that second cluster is mixed .. it seems to have both Iraq report documents and Italian recipes in there... we need to investigate further.

Before we pause and investigate further ... let's have a look at what the top 150 links looks like.

There's a similar story here... 2 distinct clusters ... one is fairly pure .. that was the one with the top-most similarity scores .. and the second cluster is mixed ...

Conclusion and Next Steps

Some definitely cool progress:

- we've developed a simple idea for document similarity, and it seems to work

- visualisation is much better as showing clusters than a list of numbers

- ... there similarity method doesn't work perfectly so needs some investigation ...

Next steps are:

- investigate to see why the similarity method doesn't work better - e.g. why Iraq docs are calculated as similar to recipes

- investigate to see if we can further improve visualisation