Instead of a black-n-white yes-or-no .. we now have a spectrum of co-occurrence for words inside a window. This allows related words that do occur frequently together, but may be placed a couple or more words apart, to be recognised as such.
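To make the idea of a "spectrum" concrete, here's a minimal sketch in JavaScript, the language of our d3 plotting code. It isn't the toolkit's actual code. It assumes a weighting where two words `d` positions apart (with `d` no larger than the window) add `1/d` to their pair's score. Adjacent words count fully and more distant words count for less. The function name `windowCooccurrence` is hypothetical.

```javascript
// Hypothetical sketch of distance-weighted co-occurrence inside a sliding window.
// Assumption: a pair of words d positions apart (1 <= d <= window) adds 1/d to the
// pair's score. Pairs are ordered, so "olive|oil" and "oil|olive" are kept separately.
function windowCooccurrence(words, window) {
  const scores = new Map(); // key "wordA|wordB" -> accumulated score
  for (let i = 0; i < words.length; i++) {
    for (let d = 1; d <= window && i + d < words.length; d++) {
      const key = words[i] + "|" + words[i + d];
      scores.set(key, (scores.get(key) || 0) + 1 / d);
    }
  }
  return scores;
}

const s = windowCooccurrence(["olive", "oil", "and", "olive", "oil"], 2);
console.log(s.get("olive|oil")); // 2
```

"olive oil" appears twice as an adjacent pair, so it scores 2. "olive" and "and" are two positions apart once, so that pair only scores 0.5.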

The results looked good .. apart from the problem of boring stop-words, which also happen to be very co-occurrent.

We've previously dealt with such boring words by developing a measure of word relevance .. which balances word frequency, commonality across documents, and word length. Such a method won't be perfect, but it'll be better than brutally applying a stop-word list, which can't adapt to each corpus.
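As a reminder of how those three factors can pull against each other, here's a sketch of *one* possible relevance score. This is **not** the formula from our earlier post. The particular combination below is an assumption made for illustration:

- frequency within a document
- times a log penalty for words found in many documents
- times a gentle log boost for longer words

The function name `relevanceScores` is also hypothetical.

```javascript
// Hypothetical relevance score (an assumed combination, not the toolkit's formula):
//   frequency   -> count of word in document / number of words in document
//   commonality -> log(numDocs / docsContainingWord), so a word in every document scores 0
//   length      -> log(1 + word length), so longer words get a gentle boost
function relevanceScores(docs) {
  // how many documents contain each word
  const docFreq = new Map();
  for (const doc of docs) {
    for (const w of new Set(doc)) docFreq.set(w, (docFreq.get(w) || 0) + 1);
  }
  // one Map of word -> relevance per document
  return docs.map((doc) => {
    const counts = new Map();
    for (const w of doc) counts.set(w, (counts.get(w) || 0) + 1);
    const scores = new Map();
    for (const [w, c] of counts) {
      const tf = c / doc.length;
      const idf = Math.log(docs.length / docFreq.get(w));
      scores.set(w, tf * idf * Math.log(1 + w.length));
    }
    return scores;
  });
}

const docs = [
  ["the", "tomato", "sauce"],
  ["the", "grated", "cheese"],
];
const rel = relevanceScores(docs);
```

Here "the" appears in every document, so its relevance drops to zero. An adaptive measure like this can demote a word in one corpus without it being on a fixed stop-list.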

Let's see how it works.

Combining Word Relevance & Co-Occurrence

How do we combine these two indicators .. co-occurrence and word relevance? Well, there are a few ways we could do it:

Option 1: Remove low-relevance stop-words first, then calculate co-occurrence.

Option 2: Calculate co-occurrence, then remove pairs whose words aren't in the most-relevant list.

Option 3: Multiply co-occurrence scores with relevance scores to get a new measure.

How do we choose?

Option 2 seems inefficient, as we'd end up building word-pairs and calculating co-occurrence for words which we would later discard. For large datasets, that would add up to significant wasted computation and time.

Option 1 is the simplest. Option 3 could work, but as always, we prefer to try simpler things first .. so we'll go with Option 1.
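Option 1 could look something like the following sketch. It is not the toolkit's actual code, and it rests on three assumptions:

- Relevance scores have already been computed into a `Map` of word to score.
- Low-relevance words are dropped from the token stream *before* windowing, so distances are measured after removal.
- The pair weighting is `1/distance`.

The function names `topWords` and `option1Cooccurrence` are hypothetical.

```javascript
// Hypothetical sketch of Option 1: filter by relevance first, then count co-occurrence.
// Keep the n most relevant words from a Map of word -> relevance score.
function topWords(relevance, n) {
  return new Set(
    [...relevance.entries()]
      .sort((a, b) => b[1] - a[1]) // highest relevance first
      .slice(0, n)
      .map(([word]) => word)
  );
}

function option1Cooccurrence(words, relevance, n, window) {
  const keep = topWords(relevance, n);
  // low-relevance words are removed from the text before any pairs are built
  const filtered = words.filter((w) => keep.has(w));
  const scores = new Map();
  for (let i = 0; i < filtered.length; i++) {
    for (let d = 1; d <= window && i + d < filtered.length; d++) {
      const key = filtered[i] + "|" + filtered[i + d];
      scores.set(key, (scores.get(key) || 0) + 1 / d); // assumed 1/distance weight
    }
  }
  return scores;
}

const relevance = new Map([["grated", 0.9], ["cheese", 0.8], ["the", 0.01], ["with", 0.02]]);
const pairs = option1Cooccurrence(["with", "the", "grated", "cheese"], relevance, 2, 2);
```

Because "with" and "the" are filtered out before any pairs are formed, no work is spent on pairs that Option 2 would later throw away. Only "grated|cheese" survives.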

Results

We've tweaked the JavaScript d3 code that creates the network graph plots:

    // `graph` (with .nodes and .links), `width` and `height` are defined elsewhere on the page
    var simulation = d3.forceSimulation(graph.nodes)
        // longer links than d3's default distance (30) make crowded networks clearer
        .force("link", d3.forceLink(graph.links).distance(50))
        // weaker repulsion than d3's default strength (-30) keeps nodes on the display
        .force("charge", d3.forceManyBody().strength(-20))
        // collision radius: tried but not used
        //.force("radius", d3.forceCollide(15))
        .force("center", d3.forceCenter(width / 2.0, height / 2.0));

The length of the links has been increased to make crowded networks clearer. The repulsion between nodes has been reduced to prevent them flying off the edge of the display! We also tried enforcing a collision radius, which effectively stops nodes getting too close, but didn't use it .. it ended up creating a regular and unnatural pattern.

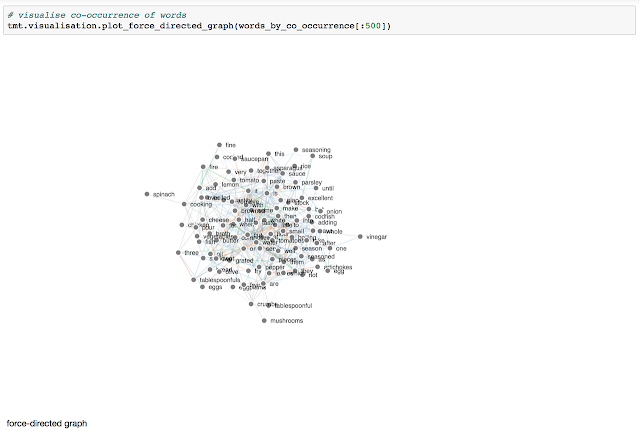

The following shows the network of nodes connecting co-occurring words (with window 2), where both words were in the top 100 most relevant for this (small) Italian Recipes dataset. It's quite crowded .. and does still contain some stop-words. If you play with it, you can explore some meaningful connections.

The next plot shows only those nodes that had a weight of more than 3.5 (we tried a few other values too).
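A threshold like this is easy to apply to the graph data before it reaches d3. Here's a sketch that keeps links whose weight exceeds the threshold and then drops any nodes left unconnected. It assumes the weight lives on each word-pair link. It also assumes a hypothetical data shape of `{ nodes: [{ id }], links: [{ source, target, weight }] }` with string ids, and `filterGraph` is a hypothetical name.

```javascript
// Hypothetical sketch: keep links above a weight threshold, then drop orphaned nodes.
// Assumed data shape: { nodes: [{ id }], links: [{ source, target, weight }] },
// where source/target are node id strings (before d3 replaces them with node objects).
function filterGraph(graph, minWeight) {
  const links = graph.links.filter((l) => l.weight > minWeight);
  // collect every node id still referenced by a surviving link
  const used = new Set();
  for (const l of links) {
    used.add(l.source);
    used.add(l.target);
  }
  const nodes = graph.nodes.filter((n) => used.has(n.id));
  return { nodes, links };
}

const graph = {
  nodes: [{ id: "olive" }, { id: "oil" }, { id: "the" }],
  links: [
    { source: "olive", target: "oil", weight: 5.2 },
    { source: "the", target: "oil", weight: 1.1 },
  ],
};
const strong = filterGraph(graph, 3.5);
```

Filtering should happen before the simulation starts, because `d3.forceLink` replaces each link's `source` and `target` with node objects once it's initialised. Also, if links name nodes by string id like this, the link force needs `.id(d => d.id)`, since its default id accessor is the node's index.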

It really brings out the key meaningful word pairings ..

- grated cheese

- tomato sauce

- pour over

- olive oil

- bread crumbs

- brown stock

That's a lot of success for now! Great job!

Next Steps - Performance Again

We applied the above ideas to a small dataset .. but we really want to apply them to the larger datasets, because co-occurrence with a stretched window doesn't really become useful with small datasets. However, indexing and calculating co-occurrence are slow .. so next we need to investigate this and find a fix!
