The topic is called Latent Semantic Indexing .. or Latent Semantic Analysis .. or Dimensionality Reduction .. all horrible phrases! That last one looks like a weapon from Dr Who!

Let's start with a super simple example...

Talking About Vehicles and Cooking - Without Using Those Words

Imagine we have some documents that talk about vehicles, and another set of documents which talk about cooking.

But - and this is important - imagine they never use the word "vehicle" or "cooking" ... instead they talk about specifics like wheel, seat, engine, slice, oven, boil ...

Here's a word-document matrix that shows how often those words occur in our documents.

You can see how 3 documents talk about vehicle stuff .. but don't mention the word vehicle. And you can see that the remaining 3 talk about cooking stuff ... but don't mention the word cooking.
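If you want to build a matrix like that yourself, here's a minimal sketch. The six mini documents and their names (`doc1` to `doc6`) are made up purely for illustration, as is the helper `word_document_matrix` .. they are not the actual numbers from the table above.

```python
from collections import Counter

vocabulary = ["wheel", "seat", "engine", "slice", "oven", "boil"]

# Made-up mini documents, invented purely for illustration.
# The first three are about vehicles, the last three about cooking,
# and none of them contains the word "vehicle" or "cooking".
documents = {
    "doc1": "wheel wheel seat engine engine engine",
    "doc2": "wheel seat seat engine",
    "doc3": "wheel engine engine seat seat seat wheel",
    "doc4": "slice oven oven boil",
    "doc5": "slice slice boil boil oven",
    "doc6": "oven boil boil boil slice",
}


def word_document_matrix(docs, vocab):
    """Return {word: {doc_name: count}} for every word in vocab."""
    counts = {name: Counter(text.split()) for name, text in docs.items()}
    # A Counter returns 0 for words that a document never uses.
    return {word: {name: counts[name][word] for name in docs} for word in vocab}


matrix = word_document_matrix(documents, vocabulary)

# Print one row per word and one column per document.
print("word".ljust(8) + "".join(name.rjust(6) for name in documents))
for word in vocabulary:
    row = "".join(str(matrix[word][name]).rjust(6) for name in documents)
    print(word.ljust(8) + row)
```

Each row is a word, each column is a document, and the zeros show which words a document never uses.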

And we know that we should be using word frequency to take account of bias from long or short documents:
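As a quick reminder of why this helps, here's a small sketch. It assumes word frequency means a word's count divided by the total number of words in that document, and the counts for `short_doc` and `long_doc` are made up for illustration.

```python
# Made-up word counts for two documents of very different lengths.
# long_doc says the same things as short_doc, just ten times over.
counts = {
    "short_doc": {"wheel": 2, "seat": 1, "engine": 1},
    "long_doc": {"wheel": 20, "seat": 10, "engine": 10},
}


def word_frequencies(word_counts):
    """Divide each word count by the total number of words in the document."""
    total = sum(word_counts.values())
    return {word: count / total for word, count in word_counts.items()}


frequencies = {name: word_frequencies(wc) for name, wc in counts.items()}

for name, freqs in frequencies.items():
    print(name, freqs)
```

The raw counts differ by a factor of ten, but the frequencies come out the same for both documents .. so document length no longer skews the comparison.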

That's all nice and easy so far ... we've done this loads in our previous posts.

Two Questions

Looking back at the documents and their words above .. we can ask 2 really good questions:

- How do we visualise those documents .. with many dimensions (words) .. on a 2 dimensional plot .. so that I can see them clearly and usefully on my computer or on a book page?

- How do I extract out the underlying topics (vehicle, cooking) ... especially when the documents don't actually mention these more general topic names specifically?

You can see that these are actually good questions - if we could answer them, we'd have very powerful tools in our hands!

In fact .. the solution to these two questions is more or less the same! Let's keep going ...

Can't Plot A Billion Dimensions!

Let's step back a bit ... and look at that first question.

If we wanted to plot documents to see how near they were to one another based on their word content, we'd have some difficulty. Why? Because the axes of that plot would be the words (wheel, seat, boil, etc) ... and there are loads of those ... too many to plot meaningfully.

If we had a vocabulary of only two words (wheel, boil) then we could plot the documents on a normal 2-dimensional chart .. like the following, which shows 6 documents plotted in this word space.

By limiting ourselves to 2 dimensions .. 2 words (wheel, boil) we miss out on so much information about those documents. Documents which appear far apart might actually be close if we looked at other dimensions .. if we had chosen other words instead.

So by brutally cutting out only 2 dimensions of the word-space, we've severely limited the information that view contains .. and it could even be misleading.
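Here's a small sketch of how misleading that 2-word view can be. It measures closeness with cosine similarity, which is my choice for this illustration and only one of several possible measures. The two documents and their word frequencies are made up.

```python
import math

vocabulary = ["wheel", "seat", "engine", "slice", "oven", "boil"]

# Made-up word frequencies, in the same order as vocabulary.
# Both documents are mostly about seats and engines.
doc_a = [0.1, 0.5, 0.4, 0.0, 0.0, 0.0]  # mentions wheel a little, never boil
doc_b = [0.0, 0.5, 0.4, 0.0, 0.0, 0.1]  # mentions boil a little, never wheel


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1 is same direction, 0 is unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Keep only the 2 words we would use as plot axes.
keep = ["wheel", "boil"]
indices = [vocabulary.index(word) for word in keep]
a_2d = [doc_a[i] for i in indices]
b_2d = [doc_b[i] for i in indices]

similarity_2d = cosine_similarity(a_2d, b_2d)
similarity_6d = cosine_similarity(doc_a, doc_b)

print("similarity using only wheel and boil:", similarity_2d)
print("similarity using all 6 words:", round(similarity_6d, 3))
```

In the (wheel, boil) view the two documents look completely unrelated .. but with all 6 words they are very similar, because they share most of their seat and engine content.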

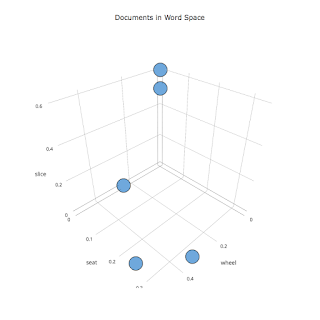

A 3-dimensional view isn't much better ... the following shows 3 dimensions, representing 3 words (wheel, slice, seat).

So what can we do? Even our simplified documents above have a vocabulary of only 6 words ... and we can't plot 6 dimensional charts on this display or paper.

We clearly need to reduce the number of dimensions ... but do so in a way that doesn't throw too much information away .. a way that preserves the essential features or relations in the higher dimensional space.

Is this even possible? Well .. sort of, yes... but let's work through our own simple documents to illustrate it.

From 6 Dimensions .. Down to 2

Look again at the 6 word vocabulary that our six documents are made of .. wheel, seat, engine, and slice, oven, boil.

You can see what we already knew .. there are actually 2 underlying topics ... vehicle and cooking.

The first topic vehicle is represented by the three words wheel, seat, engine .. similarly, the second topic cooking is represented by the words slice, oven, boil.

So .. maybe .. we can reduce those 6 dimensions down to 2 dimensions ... with those 2 axes representing the 2 topics vehicle and cooking?

That would be a useful thing to do .. not just because we're making it easier to plot ... but because those 2 topics are a good generalisation of the topics represented by the many more words in those documents.

How do we do this? How do we use those word frequency numbers?

Well .. we could invent all sorts of ways to combine those 3 numbers for wheel, seat, engine into 1 for vehicle. As usual, if simple works we should prefer it.

Let's start with simply averaging those numbers:

We've cheated here because we know which words to associate with each topic .. we'll come back to that later .. for now we're illustrating a different point about reducing dimensions.
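The averaging step can be sketched like this. The word frequencies are made up for illustration (they are not the numbers from the tables above), and the word-to-topic grouping in `topics` is the same cheat .. we've written it in by hand.

```python
# Made-up word frequencies for six documents, for illustration only.
frequencies = {
    "doc1": {"wheel": 0.5, "seat": 0.3, "engine": 0.2, "slice": 0.0, "oven": 0.0, "boil": 0.0},
    "doc2": {"wheel": 0.3, "seat": 0.3, "engine": 0.3, "slice": 0.0, "oven": 0.0, "boil": 0.1},
    "doc3": {"wheel": 0.2, "seat": 0.4, "engine": 0.3, "slice": 0.1, "oven": 0.0, "boil": 0.0},
    "doc4": {"wheel": 0.0, "seat": 0.0, "engine": 0.0, "slice": 0.3, "oven": 0.5, "boil": 0.2},
    "doc5": {"wheel": 0.1, "seat": 0.0, "engine": 0.0, "slice": 0.4, "oven": 0.2, "boil": 0.3},
    "doc6": {"wheel": 0.0, "seat": 0.1, "engine": 0.0, "slice": 0.2, "oven": 0.3, "boil": 0.4},
}

# The cheat: we decide by hand which words belong to which topic.
topics = {
    "vehicle": ["wheel", "seat", "engine"],
    "cooking": ["slice", "oven", "boil"],
}


def topic_scores(doc_freqs, topic_words):
    """Average the frequencies of each topic's words into one number per topic."""
    return {
        topic: sum(doc_freqs[word] for word in words) / len(words)
        for topic, words in topic_words.items()
    }


# Each document goes from 6 numbers (words) down to 2 numbers (topics).
scores = {name: topic_scores(freqs, topics) for name, freqs in frequencies.items()}

for name, score in scores.items():
    print(name, {topic: round(value, 3) for topic, value in score.items()})
```

Each document is now a point with just 2 coordinates (vehicle, cooking) .. which is something we can plot on an ordinary chart.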

Let's see what the plot now looks like:

Here we have 2 clear topics ... which is a simpler, distilled, view of the 6-dimensional original data.

Topics, Not Words

Ok - so we've used a rather constructed example to show how we can reduce lots of dimensions down to a smaller number .. like 2 here.

That answers the first question we set ourselves .. how do we visualise high dimensional data.

That second question was about how we extract out topics ... topics which are represented by several or many other words in documents. We've just seen how that extraction can happen.

By combining words, by averaging for example, into a single number .. we can extract out topics. Those topics won't be named by this method .. but the words that make up each topic will tell us what that topic entails.

That's fantastic! And pretty powerful!

Imagine being able to extract out the top 5 topics from a corpus ... and even if we don't have names for each of those topics .. we will know what they're made of ... For example, a topic T made of words apple, banana, orange, kiwi .. probably tells us that the topic T is fruit.
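A tiny sketch of reading a topic from its words. It assumes a topic can be stored as a weight per word, which goes a step beyond the equal-weight averaging used above. The weights in `topic_t` and the helper `top_words` are made up for illustration.

```python
# A hypothetical topic, stored as a weight per word. The weights are invented.
topic_t = {
    "apple": 0.30,
    "banana": 0.25,
    "orange": 0.20,
    "kiwi": 0.15,
    "wheel": 0.05,
    "oven": 0.05,
}


def top_words(topic, n):
    """Return the n words that contribute most to the topic, biggest first."""
    return sorted(topic, key=topic.get, reverse=True)[:n]


print(top_words(topic_t, 4))  # ['apple', 'banana', 'orange', 'kiwi']
```

The method never tells us the name "fruit" .. we supply that ourselves after looking at the top words.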

Next Time

That's cool .. we've asked some interesting questions, shown the problem of visualising and understanding high dimensional data .. and suggested one way to reduce dimensions to extract out more general topics.

... but the only thing left to work out is how to choose which words form a topic .. that's the next post!
